Introduction

A Naive Bayes classifier is a supervised machine learning model used for classification tasks. It is applied in face recognition, weather prediction, medical diagnosis, news classification, spam filtering, and similar problems, including on large datasets. The model can be updated with new training data without being rebuilt from scratch. At its core, the classifier is based on Bayes' theorem.

Assumption

It is called naive because it assumes that, within a given class, the occurrence of a certain feature is independent of the occurrence of other features. For example, if a fruit is identified on the basis of colour, shape and taste, then a red, spherical and sweet fruit is recognised as an apple. Each feature contributes individually to identifying the fruit as an apple, without depending on the others.
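To make the independence assumption concrete, here is a minimal Python sketch of the fruit example. All probabilities, the second class "orange", and the helper names `naive_scores` and `classify` are invented for illustration and do not come from any real dataset. Each class is scored as its prior multiplied by the per-feature probabilities, which is exactly what treating the features as independent allows.

```python
from math import prod

# Hypothetical probabilities, invented purely for illustration.
PRIORS = {"apple": 0.5, "orange": 0.5}
LIKELIHOODS = {
    "apple": {"red": 0.7, "spherical": 0.9, "sweet": 0.8},
    "orange": {"red": 0.1, "spherical": 0.9, "sweet": 0.6},
}


def naive_scores(features):
    """Return the unnormalised score P(class) * product of P(feature | class)."""
    return {
        label: PRIORS[label] * prod(LIKELIHOODS[label][f] for f in features)
        for label in PRIORS
    }


def classify(features):
    """Pick the class with the highest score."""
    scores = naive_scores(features)
    return max(scores, key=scores.get)


scores = naive_scores(["red", "spherical", "sweet"])
prediction = classify(["red", "spherical", "sweet"])
print(scores, prediction)
```

With these made-up numbers the apple score is much larger than the orange score, so the fruit is labelled an apple. The scores are not normalised; dividing each by their sum would turn them into probabilities.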

How Naïve Bayes' Classifier works

The Naive Bayes classifier works on the principle of conditional probability, as given by Bayes' theorem.

Bayes' theorem gives the conditional probability of an event A given that another event B has occurred: \[ P(A|B) = \frac{P(B|A)\,P(A)}{P(B)} \] Here, B is the evidence and A is the hypothesis, so the theorem gives the probability of A being true once B has been observed. The assumption made here is that the predictors/features are independent; that is, the presence of one particular feature does not affect the others. Hence the classifier is called naive.
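As a small worked example of the theorem, the sketch below computes the probability that an email is spam (hypothesis A) given that it contains a particular word (evidence B). The three input probabilities and the function name `posterior` are assumptions chosen for illustration, not measured values. The evidence term P(B) is expanded with the law of total probability.

```python
def posterior(prior_a, p_b_given_a, p_b_given_not_a):
    """Return P(A | B) from P(A), P(B | A) and P(B | not A)."""
    # P(B) = P(B | A) P(A) + P(B | not A) P(not A)
    evidence = p_b_given_a * prior_a + p_b_given_not_a * (1.0 - prior_a)
    return p_b_given_a * prior_a / evidence


# Hypothetical numbers: 20% of emails are spam, the word appears in
# 60% of spam emails and in 5% of non-spam emails.
p_spam_given_word = posterior(prior_a=0.2, p_b_given_a=0.6, p_b_given_not_a=0.05)
print(round(p_spam_given_word, 4))  # 0.75
```

Here P(B) = 0.6 × 0.2 + 0.05 × 0.8 = 0.16, so the posterior is 0.12 / 0.16 = 0.75: seeing the word raises the probability of spam from 20% to 75%.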

Types of Naive Bayes' Classifier

Multinomial Naive Bayes:

This is mostly used for document classification problems, i.e., deciding whether a document belongs to the category of sports, politics, technology, etc. The features/predictors used by the classifier are the frequencies of the words present in the document.
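The following is a minimal pure-Python sketch of the multinomial variant on word counts, not a production implementation. The toy documents, the function names, and the use of add-one (Laplace) smoothing via the `alpha` parameter are assumptions added here; smoothing is a common way to avoid zero probabilities for words never seen in a class. Log probabilities are summed instead of multiplying raw probabilities to avoid numerical underflow.

```python
from collections import Counter, defaultdict
from math import log


def train_multinomial_nb(documents):
    """documents: list of (words, label) pairs. Returns the counts needed to predict."""
    class_docs = Counter(label for _, label in documents)
    word_counts = defaultdict(Counter)
    for words, label in documents:
        word_counts[label].update(words)
    vocabulary = {w for words, _ in documents for w in words}
    return class_docs, word_counts, vocabulary


def predict_multinomial_nb(model, words, alpha=1.0):
    """Return the label with the highest log posterior score."""
    class_docs, word_counts, vocabulary = model
    total_docs = sum(class_docs.values())
    scores = {}
    for label, n_docs in class_docs.items():
        total_words = sum(word_counts[label].values())
        score = log(n_docs / total_docs)  # log prior
        for w in words:
            if w not in vocabulary:
                continue  # ignore words never seen in training
            # Smoothed estimate of P(word | class) from word frequencies.
            score += log(
                (word_counts[label][w] + alpha)
                / (total_words + alpha * len(vocabulary))
            )
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical toy corpus, invented for illustration.
docs = [
    ("goal match team win".split(), "sports"),
    ("team score goal".split(), "sports"),
    ("election vote party".split(), "politics"),
    ("party vote minister".split(), "politics"),
]
model = train_multinomial_nb(docs)
print(predict_multinomial_nb(model, ["goal", "team"]))
print(predict_multinomial_nb(model, ["vote", "election"]))
```

A word that appears several times in the input contributes once per occurrence, which is how word frequency enters the score.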

Bernoulli Naive Bayes:

This is similar to multinomial Naive Bayes, but the predictors are boolean variables. The features used to predict the class variable take only the values yes or no, for example whether or not a word occurs in the text.
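A minimal sketch of the Bernoulli variant, under the same caveats: the toy emails, the function names, and the smoothing parameter `alpha` are assumptions made for illustration. The key difference from the multinomial case is that each vocabulary word is a yes/no feature, so a word that is absent from the text also contributes to the score, through the factor 1 − P(word | class).

```python
from math import log


def train_bernoulli_nb(documents, alpha=1.0):
    """documents: list of (words, label) pairs. Each word is a yes/no feature."""
    labels = sorted({label for _, label in documents})
    vocabulary = sorted({w for words, _ in documents for w in words})
    priors, word_prob = {}, {}
    for label in labels:
        docs = [set(words) for words, lab in documents if lab == label]
        priors[label] = len(docs) / len(documents)
        # Smoothed fraction of this class's documents that contain each word.
        word_prob[label] = {
            w: (sum(w in d for d in docs) + alpha) / (len(docs) + 2 * alpha)
            for w in vocabulary
        }
    return vocabulary, priors, word_prob


def predict_bernoulli_nb(model, words):
    """Return the label with the highest log posterior score."""
    vocabulary, priors, word_prob = model
    present = set(words)
    scores = {}
    for label, prior in priors.items():
        score = log(prior)
        for w in vocabulary:
            p = word_prob[label][w]
            # Present words contribute p, absent words contribute (1 - p).
            score += log(p) if w in present else log(1.0 - p)
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical toy emails, invented for illustration.
emails = [
    (["free", "prize", "click"], "spam"),
    (["free", "offer"], "spam"),
    (["meeting", "tomorrow"], "ham"),
    (["project", "meeting", "notes"], "ham"),
]
bernoulli_model = train_bernoulli_nb(emails)
print(predict_bernoulli_nb(bernoulli_model, ["free", "click"]))
print(predict_bernoulli_nb(bernoulli_model, ["meeting"]))
```

Because only presence matters, repeating a word in the input does not change the prediction.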

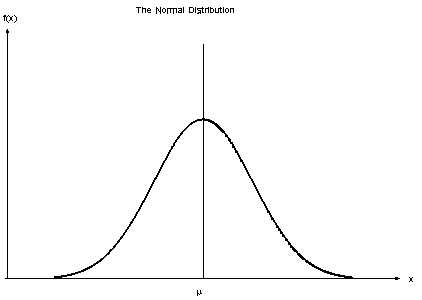

Gaussian Naive Bayes:

When the predictors take continuous rather than discrete values, we assume that these values are sampled from a Gaussian distribution.

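Because the feature values are now continuous, the conditional probability of a feature value given the class is computed from the Gaussian density: \[ P(x_i \mid y) = \frac{1}{\sqrt{2\pi\sigma_y^2}} \exp\left(-\frac{(x_i - \mu_y)^2}{2\sigma_y^2}\right) \] where \( \mu_y \) and \( \sigma_y^2 \) are the mean and variance of the feature within class \( y \).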
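The density above translates directly into code. The sketch below is a minimal single-feature illustration: the training values, the class names, and the helpers `fit` and `predict` are hypothetical, and the per-class variance is estimated with the population formula (`statistics.pvariance`), which is one reasonable choice among several.

```python
from math import exp, pi, sqrt
from statistics import mean, pvariance


def gaussian_likelihood(x, mu, variance):
    """Gaussian density P(x | y) for a class with mean mu and variance sigma^2."""
    return exp(-((x - mu) ** 2) / (2 * variance)) / sqrt(2 * pi * variance)


def fit(training):
    """Estimate the prior, mean and variance of the feature for each class."""
    total = sum(len(values) for values in training.values())
    return {
        label: (len(values) / total, mean(values), pvariance(values))
        for label, values in training.items()
    }


def predict(params, x):
    """Pick the class maximising P(y) * P(x | y)."""
    scores = {
        label: prior * gaussian_likelihood(x, mu, variance)
        for label, (prior, mu, variance) in params.items()
    }
    return max(scores, key=scores.get)


# Hypothetical one-feature measurements, invented for illustration.
training = {
    "class_a": [4.9, 5.1, 5.0, 5.2],
    "class_b": [6.0, 6.2, 5.9, 6.1],
}
params = fit(training)
print(predict(params, 5.05))
print(predict(params, 6.0))
```

With several continuous features, the naive assumption means the per-feature densities are simply multiplied together (or their logarithms summed) for each class.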